Advanced Neural Network Settings

The neural networks in the toolbox have a fully configurable architecture. Unlike some other neural network software you may have tried, almost every aspect of them can be changed. This section is intended for advanced users only and shows how to customize the neural network beyond what is covered in the intermediate section. The functions explained here do the same job as those in the intermediate section but give much more control over the network topology. Use one set or the other, not both.

By default, the toolbox scales data to the range 0 to 1, because the default activation function in each layer can only output values between 0 and 1. If you scale your input and output data to between -1 and 1 without changing anything else, the neural network will produce poor results. Its output layer can only produce values between 0 and 1, so it can never reach any target below 0. Scaling the data to between -1 and 1 can actually help the network learn, because the activation function accepts inputs in that range; the limitation is only on what it can output. To use data scaled to -1 and 1, the only change needed is to switch the output layer to an activation function whose output range is -1 to 1. This section describes the functions that let you do that. The toolbox automatically descales the network's output back to the original range, so you do not need to handle that yourself.
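The sketch below shows why the output activation must match the output scaling range. It assumes plain min-max scaling to [-1, 1]. The function names are hypothetical and are not part of the toolbox's API. The descale step is the inverse mapping that the toolbox is described as applying automatically.

```python
# Minimal sketch (hypothetical helpers, not the toolbox API): min-max
# scaling to a target range, the inverse "descale" step, and a check
# showing that a logistic output neuron cannot reach a target of -1.
import math


def minmax_scale(values, lo=-1.0, hi=1.0):
    """Linearly map values from [min(values), max(values)] onto [lo, hi]."""
    vmin, vmax = min(values), max(values)
    if vmax == vmin:
        raise ValueError("values must not all be equal")
    scaled = [lo + (v - vmin) * (hi - lo) / (vmax - vmin) for v in values]
    return scaled, (vmin, vmax)


def minmax_descale(scaled, params, lo=-1.0, hi=1.0):
    """Undo minmax_scale using the stored original min and max."""
    vmin, vmax = params
    return [vmin + (s - lo) * (vmax - vmin) / (hi - lo) for s in scaled]


def logistic(x):
    """Default (sigmoid) activation: output is always strictly between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-x))


targets = [10.0, 15.0, 20.0]
scaled, params = minmax_scale(targets)     # targets now span -1 to 1
restored = minmax_descale(scaled, params)  # back to the original units

# Even a very negative input only pushes the logistic output towards 0,
# so the scaled target -1.0 is unreachable with this output activation.
closest_to_minus_one = logistic(-50.0)
```

Swapping the output activation for one with a -1 to 1 range (for example TanH) removes the mismatch without changing how the data is scaled.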

Use caution when changing these settings, as they can increase neural network curve fitting (overfitting to the training data). If you have only a small amount of training data, you are much better off sticking with the default settings, which are proven to work. If, on the other hand, you have plenty of training data and monitor the neural network's performance on testing data (a recent feature of the toolbox that you are strongly advised to use), changing the network architecture and the default data scale can significantly improve results and reduce the overall size of the network.

SetScalingAlgorithm(Algorithm) - By default, data is scaled with the minimum and maximum scaling algorithm, which simply scales the data into a range the neural network can use. To select this algorithm, pass 1 to this function. The other scaling algorithm, mean and standard deviation, tries to filter out outliers and remove data that may be difficult for the neural network to learn. To select this algorithm, pass 0.
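This section does not say exactly how the mean and standard deviation algorithm filters outliers. The sketch below assumes one common approach:

1. Convert each value to a z-score.
2. Clip it to plus or minus k standard deviations. Here k = 2, which is an arbitrary choice.
3. Map the interval [-k, k] linearly onto the target range.

`meanstd_scale` is a hypothetical name, not a toolbox function.

```python
# Hypothetical mean/standard-deviation scaler; the clipping rule and k=2
# are assumptions, not the toolbox's documented behaviour.
import statistics


def meanstd_scale(values, k=2.0, lo=0.0, hi=1.0):
    """Scale values via clipped z-scores onto [lo, hi]."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    if std == 0:
        raise ValueError("values have zero standard deviation")
    out = []
    for v in values:
        z = (v - mean) / std
        z = max(-k, min(k, z))  # clip outliers to +/- k standard deviations
        out.append(lo + (z + k) * (hi - lo) / (2 * k))
    return out


data = [1, 2, 3, 4, 5, 6, 7, 8, 9, 100]
scaled = meanstd_scale(data)
```

In this example the value 100 lies roughly 3 standard deviations above the mean. It is therefore clipped and maps to exactly 1.0, rather than defining the top of the range on its own as it would under min-max scaling.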

SetInputScalingMinMax(Min, Max) - Sets the range that the neural network's input data is scaled to. The minimum allowed value is -2 and the maximum is 2. The default is 0 to 1, because the sigmoid activation function is the default in each layer.

SetOutputScalingMinMax(Min, Max) - Sets the range that the neural network's output data is scaled to. The minimum allowed value is -2 and the maximum is 2. The default is 0 to 1, because the sigmoid activation function is the default in each layer.
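The helper below is a hypothetical sanity check, not part of the toolbox. It tests a proposed output scaling range in two ways:

- against the -2 to 2 limit stated above;
- against the output ranges listed for each activation function in this section.

It only knows the activation numbers listed here, so any other number is reported as unknown.

```python
# Hypothetical check (not a toolbox function). Output ranges are taken
# from the activation function list in this section.
ACTIVATION_OUTPUT_RANGE = {
    2: (0.0, 1.0),   # Logistic (sigmoid)
    3: (-1.0, 1.0),  # TanH
    4: (-1.0, 1.0),  # Symmetric Elliot
    5: (-1.0, 1.0),  # Symmetric Gaussian
    6: (0.0, 1.0),   # Elliot
    7: (0.0, 1.0),   # Gaussian
    8: (0.0, 1.0),   # Inverse Gaussian
}


def check_output_scaling(scale_min, scale_max, output_activation):
    """Return a list of problems with an output scaling range (empty if none)."""
    problems = []
    if not -2.0 <= scale_min < scale_max <= 2.0:
        problems.append("range must satisfy -2 <= Min < Max <= 2")
    if output_activation not in ACTIVATION_OUTPUT_RANGE:
        problems.append(f"unknown activation number {output_activation}")
        return problems
    act_min, act_max = ACTIVATION_OUTPUT_RANGE[output_activation]
    if scale_min < act_min or scale_max > act_max:
        problems.append(
            f"activation {output_activation} only outputs values in "
            f"[{act_min}, {act_max}], so targets in "
            f"[{scale_min}, {scale_max}] cannot all be reached"
        )
    return problems


ok = check_output_scaling(-1.0, 1.0, 3)   # TanH output with -1 to 1 data
bad = check_output_scaling(-1.0, 1.0, 2)  # logistic output with -1 to 1 data
```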

SetNetworkWithActivationLayer1...SetNetworkWithActivationLayer3(NeuronsInLayer1, ActivationInLayer1, NeuronsInLayer2, ActivationInLayer2, ..., OutputLayerActivation) - These three functions let you set how many hidden layers and neurons the neural network has, along with the activation function in each layer. The last parameter of each function specifies the activation function for the output layer. Choose from the following activation functions by passing the corresponding number as the parameter:
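The sketch below shows what the (neurons, activation) parameter pairs describe. It builds a small fully connected network with random weights and runs one forward pass, with each layer applying its own activation function.

It rests on these assumptions:

- `build_network` and `forward` are hypothetical names.
- Only three activation functions are included.
- The example network has two hidden layers: 8 logistic neurons, then 4 TanH neurons, followed by a TanH output. This might correspond to a call such as SetNetworkWithActivationLayer2(8, 2, 4, 3, 3), but the exact mapping is an assumption.

```python
# Hypothetical forward pass for a network described by
# (neurons, activation_number) pairs plus an output activation.
import math
import random

# Subset of the activation numbers listed in this section.
ACTIVATIONS = {
    2: lambda x: 1.0 / (1.0 + math.exp(-x)),  # Logistic (sigmoid)
    3: math.tanh,                              # TanH
    4: lambda x: x / (1.0 + abs(x)),           # Symmetric Elliot
}


def build_network(n_inputs, hidden, n_outputs, output_activation, seed=0):
    """Create random weights; hidden is a list of (neurons, activation) pairs."""
    rng = random.Random(seed)
    sizes = [n_inputs] + [n for n, _ in hidden] + [n_outputs]
    acts = [a for _, a in hidden] + [output_activation]
    layers = []
    for fan_in, fan_out, act in zip(sizes, sizes[1:], acts):
        # Each neuron gets fan_in weights followed by one bias term.
        weights = [[rng.uniform(-1.0, 1.0) for _ in range(fan_in + 1)]
                   for _ in range(fan_out)]
        layers.append((weights, ACTIVATIONS[act]))
    return layers


def forward(layers, inputs):
    """Propagate inputs through every layer, applying each layer's activation."""
    values = list(inputs)
    for weights, act in layers:
        values = [act(sum(w * v for w, v in zip(row[:-1], values)) + row[-1])
                  for row in weights]
    return values


net = build_network(3, [(8, 2), (4, 3)], n_outputs=1, output_activation=3)
out = forward(net, [0.2, -0.5, 0.9])  # TanH output: always between -1 and 1
```

Changing only `output_activation` (for example from 2 to 3) is what allows the network to produce targets scaled to -1 and 1.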

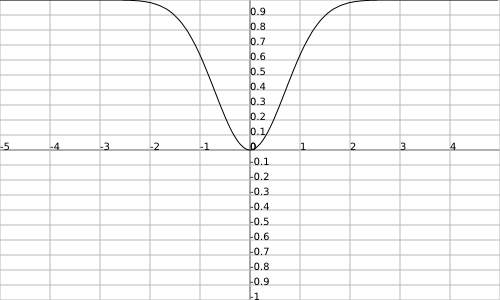

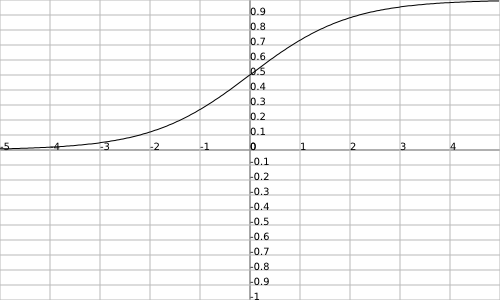

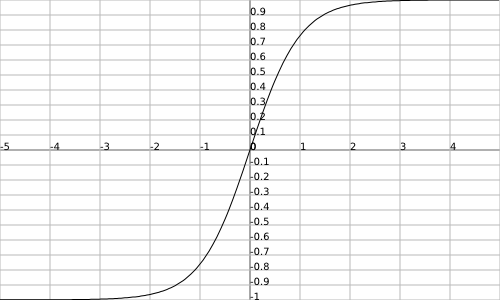

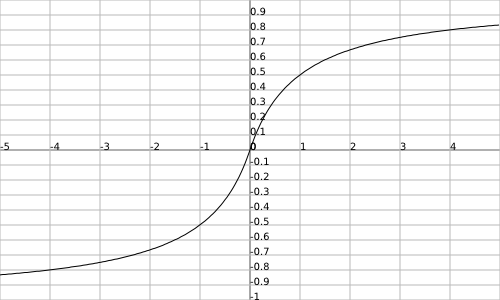

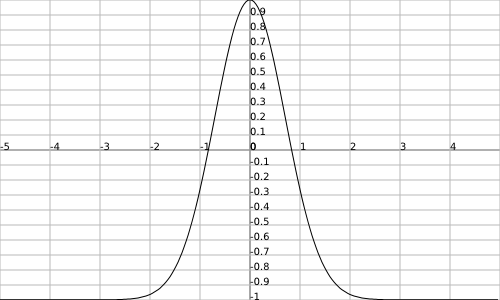

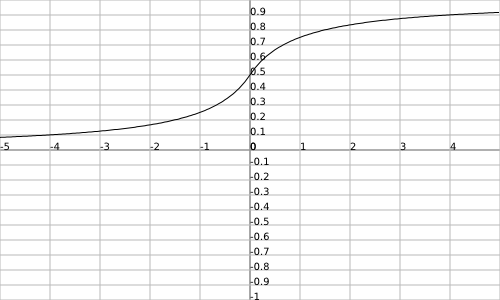

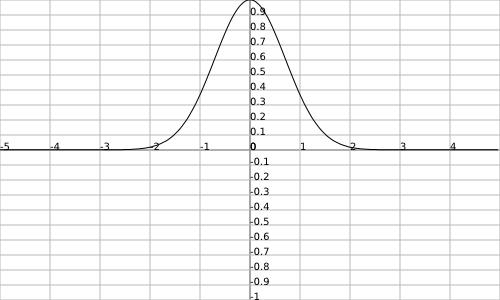

In each of the activation function images above, the x axis is the input and the y axis is the output.

Logistic - Number 2 - Activation function, also known as sigmoid. Output range is between 0 and 1.

TanH - Number 3 - Activation function. Output range is between -1 and 1.

Symmetric Elliot - Number 4 - Activation function. Output range is between -1 and 1.

Symmetric Gaussian - Number 5 - Activation function. Output range is between -1 and 1.

Elliot - Number 6 - Activation function. Output range is between 0 and 1.

Gaussian - Number 7 - Activation function. Output range is between 0 and 1.

Inverse Gaussian - Number 8 - Activation function. Output range is between 0 and 1.
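The toolbox does not give the formulas behind these activation functions. The definitions below are common forms with a steepness of 1, so treat them as assumptions. In particular, Inverse Gaussian is assumed to be 1 - exp(-x²). The sampling loop checks that each function stays inside the output range listed above.

```python
# Assumed formulas for the listed activation functions (steepness = 1);
# the toolbox's exact definitions may differ.
import math


def logistic(x):            # 2: output in (0, 1)
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)         # avoids overflow for large negative x
    return z / (1.0 + z)


def tanh(x):                # 3: output in (-1, 1)
    return math.tanh(x)


def symmetric_elliot(x):    # 4: output in (-1, 1)
    return x / (1.0 + abs(x))


def symmetric_gaussian(x):  # 5: output in (-1, 1]
    return 2.0 * math.exp(-x * x) - 1.0


def elliot(x):              # 6: output in (0, 1)
    return 0.5 * x / (1.0 + abs(x)) + 0.5


def gaussian(x):            # 7: output in (0, 1]
    return math.exp(-x * x)


def inverse_gaussian(x):    # 8: assumed 1 - gaussian(x), output in [0, 1)
    return 1.0 - math.exp(-x * x)


ACTIVATIONS = {2: logistic, 3: tanh, 4: symmetric_elliot,
               5: symmetric_gaussian, 6: elliot, 7: gaussian,
               8: inverse_gaussian}

xs = [i / 10.0 for i in range(-100, 101)]  # sample inputs from -10 to 10
for number, f in ACTIVATIONS.items():
    ys = [f(x) for x in xs]
    print(number, f.__name__, round(min(ys), 3), round(max(ys), 3))
```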