File Menu

New - starts a new project by closing the current window and returning to the neural network selection window.

Save - saves the current project. The save feature is very useful for large neural network projects and for trying out different strategies.

Open - opens an existing project

Close - closes the current window

Exit - exits the program

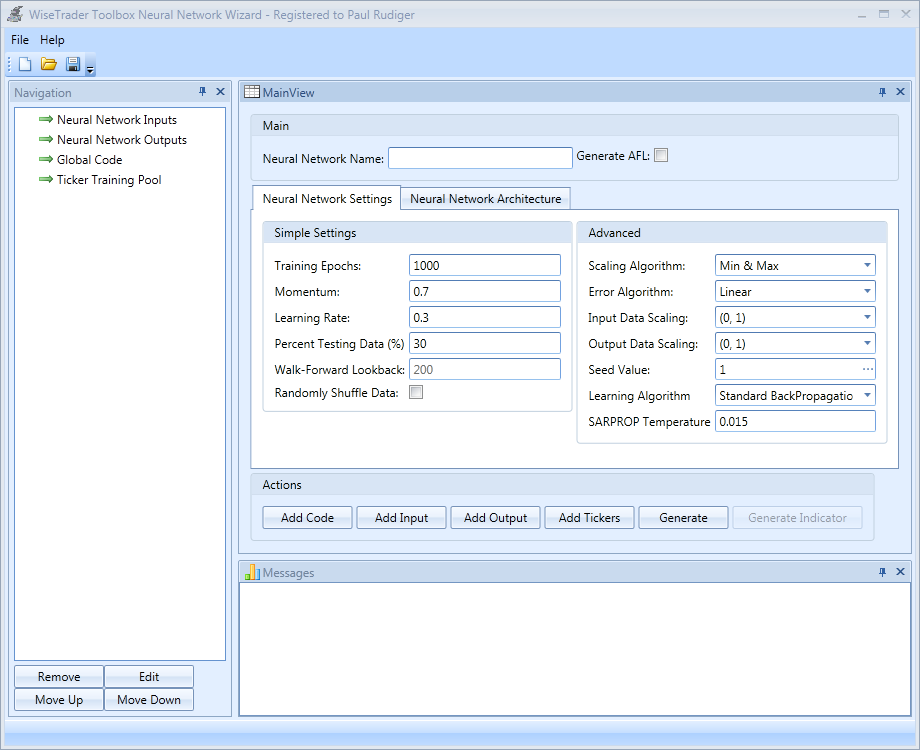

Starting: Before you begin, enter the neural network name. The name identifies the network among the other neural networks you create. Each name MUST be unique; otherwise you may experience errors, blank screens or, worse, incorrect results in Amibroker!

Adding inputs/outputs: Each neural network you make will consist of a number of inputs (minimum 2) and 1 or more outputs (limited to one if you selected the Walk-Forward Neural Network). You can add inputs and outputs by clicking on Add Input and Add Output respectively.

Generating formulas: After adding your inputs and outputs, you can stop there if you are a beginner and generate your training and indicator formulas by clicking 'Generate'. You will find more information about how to use the generated formulas under the 'Generate' topic. The default settings are more than adequate to get you started!

If your inputs and outputs need to use a function, or you need to share code between multiple inputs and outputs, you can specify this extra code by clicking Add Code.

Training on multiple stocks: If you selected the Standard Training Neural Network, you can also train on multiple stocks at once! Adding the ticker names via Add Tickers merges all the data together so that the network trains on it as a whole. There are some things to observe to get the best results. Make sure that all the inputs and outputs are uniform (similar in range) across the stocks. Also, Amibroker shrinks or expands data to have the same number of bars as the selected symbol. If your currently selected symbol has only 500 bars and you want to train on multiple stocks each with 2000 bars, Amibroker will trim 1500 bars from each stock to match the currently selected one. So when training on multiple stocks, pick stocks that have around the same number of bars each, then select the one with the most bars of data and train from there.
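The effect of the selected symbol's bar count can be illustrated with a short Python sketch. This is not Amibroker code: the ticker names and bar counts are hypothetical, and the trimming rule is a simplified model of the behaviour described above.

```python
# Hypothetical bar counts per ticker (illustrative values only).
bar_counts = {"AAA": 500, "BBB": 2000, "CCC": 1950}


def bars_lost(selected, counts):
    """Bars trimmed from each ticker when `selected` is the current symbol.

    Simplified model: every longer ticker is cut down to the selected
    symbol's bar count; shorter tickers lose nothing (they are padded).
    """
    limit = counts[selected]
    return {ticker: max(0, n - limit) for ticker, n in counts.items()}


def best_symbol(counts):
    """Pick the ticker with the most bars to select before training."""
    return max(counts, key=counts.get)


# Selecting the short symbol throws away most of the other stocks' data.
print(bars_lost("AAA", bar_counts))

# Selecting the longest symbol keeps every bar.
longest = best_symbol(bar_counts)
print(longest, bars_lost(longest, bar_counts))
```

Running a check like this over your ticker list before training shows how much data would be discarded for a given choice of selected symbol.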

If you selected the Generate AFL option, you can also generate the standalone version of your neural network indicator after it has been trained by clicking 'Generate Indicator'. This option is not available to Walk-Forward neural networks.

Simple Settings

Training Epochs - Sets the number of training runs for the neural network. The more epochs, the more thoroughly the neural network learns the training data. Setting this value too high can lead to curve fitting, however, so experiment to find the best number of epochs.

Momentum - Affects how fast the neural network trains. A larger value can speed up convergence, but setting it too large can hurt performance because the neural network may jump over the optimum point.

Learning Rate - Sets the size of each training step and works together with momentum. The default is 0.1, but the value can be set much lower to increase the neural network's sensitivity, or much higher. Setting this value too high can make the neural network jump over the optimum result, just as with momentum.
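To make the interaction between learning rate and momentum concrete, here is a minimal Python sketch of gradient descent with momentum on a one-variable toy problem. It is a textbook update rule used for illustration, not the Wizard's actual training code; the function name `train` and the toy objective are assumptions.

```python
def train(learning_rate, momentum, steps=50, start=0.0):
    """Minimise f(w) = (w - 3)^2 using gradient descent with momentum.

    Toy example only: the optimum is at w = 3.
    """
    w, velocity = start, 0.0
    for _ in range(steps):
        gradient = 2.0 * (w - 3.0)  # derivative of (w - 3)^2
        # Momentum carries over part of the previous step.
        velocity = momentum * velocity - learning_rate * gradient
        w += velocity
    return w


# Default-like learning rate, with and without momentum: both settle near 3.
print(train(learning_rate=0.1, momentum=0.0))
print(train(learning_rate=0.1, momentum=0.5))

# A learning rate that is too high overshoots the optimum further on
# every step and never settles.
print(train(learning_rate=1.1, momentum=0.0))
```

In this toy case a moderate momentum speeds up convergence, while an oversized learning rate makes each step jump past the optimum, which is the behaviour the two settings above warn about.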

Percent Testing Data - Tells the neural network training algorithm to set aside a certain percentage of the data purely for testing the neural network's performance. The performance on the training and testing data sets is displayed as training progresses. If the MSE (mean squared error) for the training set keeps improving while the MSE on the testing set increases a lot, you may have a serious curve fitting problem. In some situations the MSE on the testing set can worsen for a while and then significantly improve.
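The idea of a held-out testing set and the warning sign described above can be sketched in Python as follows. The helper names (`split`, `mse`, `looks_curve_fitted`) are hypothetical, and holding out the last part of the data is an assumption; the manual does not say which portion the Wizard reserves.

```python
def split(samples, percent_testing):
    """Hold out the last `percent_testing` percent of samples for testing.

    Assumption: the tail of the data is held out.
    """
    n_test = int(len(samples) * percent_testing / 100)
    cut = len(samples) - n_test
    return samples[:cut], samples[cut:]


def mse(desired, actual):
    """Mean squared error between desired and actual outputs."""
    return sum((d - a) ** 2 for d, a in zip(desired, actual)) / len(desired)


def looks_curve_fitted(train_history, test_history, window=3):
    """True if training MSE fell while testing MSE rose over the last
    `window` epochs. A crude heuristic, not a definitive test."""
    if len(train_history) <= window or len(test_history) <= window:
        return False
    train_recent = train_history[-window - 1:]
    test_recent = test_history[-window - 1:]
    return train_recent[-1] < train_recent[0] and test_recent[-1] > test_recent[0]


train_part, test_part = split(list(range(10)), percent_testing=20)
print(train_part, test_part)
print(mse([1.0, 0.0], [0.0, 0.0]))
print(looks_curve_fitted([0.5, 0.4, 0.3, 0.2], [0.30, 0.35, 0.40, 0.50]))
```

Because the testing MSE can worsen temporarily and then recover, a flag like this is a prompt to look more closely rather than a reason to stop training immediately.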

Randomly Shuffle Data - Randomly shuffles all the data, so that the most recent data and the old data become mixed.

Advanced Settings

Error Algorithm - Sets the neural network error training algorithm. This is the algorithm that compares your desired output with the actual neural network output and determines what the error is. There are currently two options. The Linear algorithm simply takes the difference between the desired output and the actual neural network output. The TanH algorithm treats small errors as less significant and large errors as more significant.
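The difference between the two options can be illustrated with the Python sketch below. The manual does not give the exact formulas, so both functions are assumptions: the linear error is a plain difference, and the TanH-style error uses the inverse hyperbolic tangent of the difference, which stays close to the linear error for small differences and grows steeply as the difference approaches 1. It assumes outputs scaled so that the difference lies between -1 and 1.

```python
import math


def linear_error(desired, actual):
    """Plain difference between desired and actual output."""
    return desired - actual


def tanh_error(desired, actual, limit=0.9999999):
    """Assumed TanH-style error: inverse hyperbolic tangent of the difference.

    The difference is clamped just inside (-1, 1) so atanh stays finite.
    """
    diff = max(-limit, min(limit, desired - actual))
    return math.atanh(diff)


for desired, actual in [(0.6, 0.5), (0.9, 0.4), (1.0, 0.1)]:
    lin = linear_error(desired, actual)
    tan = tanh_error(desired, actual)
    # The ratio shows how much the TanH-style error amplifies the difference.
    print(f"diff={lin:.2f} linear={lin:.4f} tanh_style={tan:.4f} ratio={tan / lin:.3f}")
```

Under this assumed formulation, a small miss is penalised almost exactly as in the linear case, while a large miss is penalised disproportionately, which matches the qualitative description above.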

Seed - When a neural network is created, it is randomly initialized using this seed value. The default seed value is 1, but you can change it by entering a new integer value or by pressing the button next to the box to pick a new value at random. A neural network can sometimes get stuck in a local minimum when training, so changing the seed can sometimes improve the results.

Scaling Algorithm - The algorithm used for scaling data by default is the minimum and maximum scaling algorithm, which simply scales data down to a range that neural networks can use. The other scaling algorithm, called mean and standard deviation, tries to filter out outliers and remove data that may be difficult for the neural network to learn. The recommended algorithm is Min & Max unless your data is very noisy.
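The two scaling approaches can be sketched in Python as follows. The manual does not document the exact formulas, so this is an illustration under assumptions: the min and max version is the standard linear rescale, while the mean and standard deviation version is modelled here as a z-score clipped at a hypothetical `clip` number of standard deviations and then mapped to the target range.

```python
import statistics


def min_max_scale(values, lo=0.0, hi=1.0):
    """Linearly map values so the minimum becomes lo and the maximum hi."""
    vmin, vmax = min(values), max(values)
    span = vmax - vmin
    if span == 0:
        return [(lo + hi) / 2 for _ in values]  # constant series
    return [lo + (v - vmin) * (hi - lo) / span for v in values]


def mean_std_scale(values, lo=0.0, hi=1.0, clip=2.0):
    """Assumed mean/std scaling: clip z-scores to +/- `clip`, then map
    the clipped range onto [lo, hi]. Outliers land on the range edges."""
    mean = statistics.mean(values)
    std = statistics.pstdev(values)
    if std == 0:
        return [(lo + hi) / 2 for _ in values]  # constant series
    scaled = []
    for v in values:
        z = max(-clip, min(clip, (v - mean) / std))
        scaled.append(lo + (z + clip) * (hi - lo) / (2 * clip))
    return scaled


# Hypothetical series with one extreme outlier at the end.
data = [1, 2, 3, 4, 5, 6, 7, 8, 9, 100]
print([round(x, 3) for x in min_max_scale(data)])
print([round(x, 3) for x in mean_std_scale(data)])
```

With min and max scaling the single outlier defines the top of the range and squeezes every other value toward the bottom; in the clipped version the outlier is simply pinned to the edge of the range.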

Input Data Scaling - Sets the neural network's minimum and maximum target input data scaling range. By default it is 0 to 1 because the sigmoid activation function is the default in each layer.

Output Data Scaling - Sets the neural network's minimum and maximum target output data scaling range. By default it is 0 to 1 because the sigmoid activation function is the default in each layer. If you change the output layer's activation function to one that has a different range, you should change the scaling range to match.

Learning Algorithm - Refer to SetLearningAlgorithm in the WiseTrader Toolbox Documentation.

Neural Network Architecture

This is an advanced section of the Wizard that allows you to create custom types of neural networks. The top of the list is the output layer and the bottom of the list is the input layer.

When specifying the architecture, you also have the option of specifying the output layer's activation function. When specifying the output activation, the number of neurons is ignored because it is determined by the number of outputs in the neural network. Your neural network architecture needs at least 1 hidden layer; otherwise the default is used, which is 10 neurons with the sigmoid (Logistic) activation function. The entries at the bottom of the list are the layers closest to the input, and the entries at the top are the output activation and the layers closest to the output layer.
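The ordering and default rules above can be sketched in Python. This is only a model of the rules as described, not the Wizard's implementation; the row format `(kind, neurons, activation)` and the function name `resolve_architecture` are hypothetical.

```python
def resolve_architecture(rows, n_inputs, n_outputs):
    """Turn a top-to-bottom architecture list into input-to-output order.

    `rows` is a hypothetical list of (kind, neurons, activation) tuples,
    listed output-first as in the Wizard. Returns (layer_sizes, activations).
    """
    hidden = [(n, act) for kind, n, act in rows if kind == "hidden"]
    # The neuron count on the output row is ignored; only its activation is used.
    output_activation = next(
        (act for kind, _, act in rows if kind == "output"), "sigmoid"
    )
    if not hidden:
        hidden = [(10, "sigmoid")]  # documented default hidden layer
    hidden.reverse()  # bottom of the list is closest to the input
    sizes = [n_inputs] + [n for n, _ in hidden] + [n_outputs]
    activations = [act for _, act in hidden] + [output_activation]
    return sizes, activations


rows = [
    ("output", 99, "linear"),   # neuron count here is ignored
    ("hidden", 5, "tanh"),      # closest to the output
    ("hidden", 8, "sigmoid"),   # closest to the input
]
print(resolve_architecture(rows, n_inputs=4, n_outputs=1))
print(resolve_architecture([], n_inputs=4, n_outputs=1))
```

Reading the result from left to right gives the network from input to output, which is the reverse of the order in which the layers appear in the list.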